OpenAI/GPT-2 Security – Towards an information crisis?

On 6 January 2017, U.S. intelligence agencies released a report on interference by the Russian government in the 2016 U.S. election, describing a disinformation campaign that may have contributed to Donald Trump's win. In the following months, a battle against fake news erupted in the media, with journalists and political figures accusing each other of spreading inaccurate information.

Fake news has also targeted the economic sector directly. In China, a rumour that seaweed was made of plastic spread on the WeChat messaging application, causing a US$14.7 million loss for seaweed producers and leading to the arrest of 18 people.

With the rise of Internet technologies (and the adoption of social media), information is relayed much faster than before, using multiple channels. Rumors can now spread extremely quickly and have a disastrous impact.

While countries are creating new protection laws, the emergence of AI technologies such as Deepfake, Descript and OpenAI/GPT-2 will make fake news detection more difficult. To reduce the impact of these “fake kiddies”, governments need to adjust their countermeasures.

What’s an opinion?

I often debate political issues with my friends. The reality is that sometimes I am right and sometimes I am wrong, or at least that is how I feel.

Opinions are always subjective: what I think is wrong might be “less wrong” to someone else, or even considered “right” by others, depending on our values, ethics, experiences, personalities and many other factors.

However, while all humans differ in many ways, we also share common values, which are likely to be even more prevalent within a close circle of friends.

So why do we so often debate instead of reaching the same conclusion?

Opinion, decision making process and propaganda

Decision making has been analysed in numerous studies. It is a complex process that can be influenced by many factors. As articulated on the Inquiries Journal website:

“There are several important factors that influence decision making. Significant factors include past experiences, a variety of cognitive biases, an escalation of commitment and sunk outcomes, individual differences, including age and socioeconomic status, and a belief in personal relevance. These things all impact the decision-making process and the decisions made.”

According to Encyclopaedia Britannica, the social environment, mass media, interest groups and opinion leaders are the key influencers of opinion.

It also defines propaganda as “the dissemination of information—facts, arguments, rumours, half-truths, or lies—to influence public opinion”. This manipulation of information to control human behaviour (often to influence economic or political decisions) has contributed to some of history's horrors. As Joseph Goebbels would say: “It would not be impossible to prove with sufficient repetition and a psychological understanding of the people concerned that a square is in fact a circle. They are mere words, and words can be molded until they clothe ideas and disguise.”

Internet News, Groups and Social Media as new influencers

Living in a digital world, it is easy to see that most, if not all, of the key influencers of opinion are now accessed (at least from Generation X onwards) mainly through Internet services. These services filter, list, classify and decide how information is displayed. As information travels faster, and almost anyone can edit information on the Internet, it is unsurprising that we have seen more and more fake news on these platforms in the past few years.

Fake news and AI

OpenAI/GPT-2 – The “Fake News” engine

I recently came across GPT-2 from OpenAI. OpenAI is an artificial intelligence research laboratory based in San Francisco, founded as a non-profit, whose stated mission is to ensure that artificial general intelligence benefits all of humanity. GPT-2 is a large transformer-based language model with 1.5 billion parameters, trained on a dataset of 8 million web pages with a single objective: predicting the next word, given all of the previous words in a text.

In other words, OpenAI created an AI algorithm that was fed a very large amount of data. From this data, the program “learned” how words are put together, and it aims to predict the next word. Each generated word is then fed back in as input to predict the following one, until the model produces readable sentences.
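To make this feedback loop concrete, here is a minimal sketch in Python. It is not GPT-2: a tiny hand-written table of next-word probabilities (entirely hypothetical, invented for illustration) stands in for the 1.5-billion-parameter transformer, and the loop greedily picks the most likely next word, appends it, and feeds the extended text back in.

```python
from typing import Dict, List

END = "<end>"  # special marker meaning "stop generating"

# Hypothetical toy "language model" invented for illustration: for each
# previous word, a probability distribution over possible next words.
# GPT-2 conditions on the whole preceding text with a transformer;
# this table only looks at the last word.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "take": {"an": 0.6, "a": 0.3, "the": 0.1},
    "an": {"umbrella": 0.7, "iced": 0.3},
    "iced": {"coffee": 1.0},
    "umbrella": {END: 0.8, "today": 0.2},
    "coffee": {END: 1.0},
}


def predict_next(words: List[str]) -> str:
    """Return the most probable next word given the text so far (greedy choice)."""
    candidates = TOY_MODEL.get(words[-1], {END: 1.0})
    return max(candidates, key=lambda w: candidates[w])


def generate(prompt: str, max_new_words: int = 10) -> str:
    """Autoregressive loop: each predicted word is appended and fed back in."""
    words = prompt.split()
    for _ in range(max_new_words):
        next_word = predict_next(words)
        if next_word == END:
            break
        words.append(next_word)
    return " ".join(words)


print(generate("It is raining, I should take"))
```

With this toy table the loop prints “It is raining, I should take an umbrella”. Because the choice is greedy, the same prompt always yields the same continuation.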

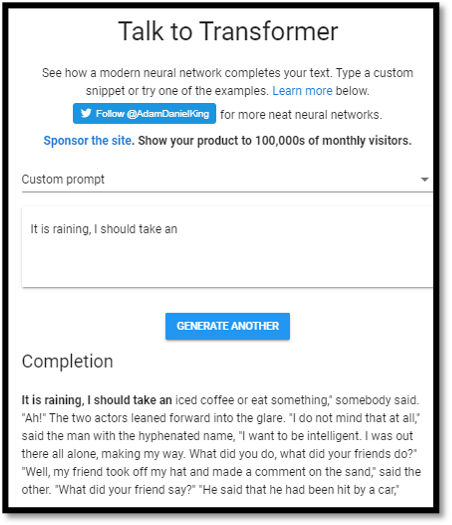

For example, if I feed the program the following: “It is raining, I should take __”, it should complete the sentence with “an umbrella” or something else that makes sense.

A public web interface for testing GPT-2, Talk to Transformer, is available at https://talktotransformer.com/

When I queried GPT-2 with this sentence, I obtained a couple of unexpected results, as shown in the screen captures below:

Here is the text version of the outputs:

- It is raining, I should take an iced coffee or eat something,” somebody said. “Ah!” The two actors leaned forward into the glare. “I do not mind that at all,” said the man with the hyphenated name, “I want to be intelligent. I was out there all alone, making my way. What did you do, what did your friends do?” “Well, my friend took off my hat and made a comment on the sand,” said the other. “What did your friend say?” “He said that he had been hit by a car,”

- It is raining, I should take a iced coffee to stay awake!” he said, “what’s a crime anyway? I give you what you deserve.” He rang the bell on the outside of the motel and bolted out the door.

He looked at the clock and, seeing that it was 10:01, said, “Watch this asshole go!” He pounded the kitchen door with his fist and yelled, “Arrest him!”

“Why?” “I don’t know, he’s a little troubled. I am the one who told him to leave his weapons.

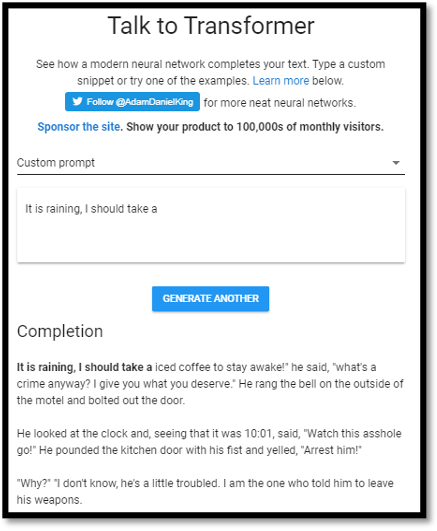

While it looks like the algorithm has been fed novels and other literature, the output can become stranger, even darker, as shown below:

It is raining, I should take a iced drink, The last time I had that I am sure I drove a broom through your face, And I wanted you to start cleaning your gut, And I want you to come back I want to kill you, I want to destroy you And I want you to leave my house, please. If you ever want to tell me the truth I hope you burn your house down, this is for real, I had a dream about killing you, I was on the phone with Angela during the crime, I kept telling her that you should take this

Although I did not explore the tool's possibilities in depth, I noted that the output:

- is much more complex than I would have expected

- is closer to written English literature than spoken English language

- is generated extremely quickly (in less than a second for each paragraph)

- can significantly differ even with the same input
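The last point, that the same input can produce very different outputs, is typical of generators that sample the next word from the predicted probability distribution instead of always taking the most likely word. I am assuming sampling here; the exact decoding settings behind the web interface are not known to me. The sketch below uses a hypothetical toy table of next-word probabilities (not GPT-2's real values) and Python's `random.choices` for weighted sampling.

```python
import random
from typing import Dict

END = "<end>"  # special marker meaning "stop generating"

# Hypothetical toy next-word distributions (not GPT-2's real probabilities).
# Words missing from the table are treated as ending the sentence.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "take": {"an": 0.4, "a": 0.4, "the": 0.2},
    "an": {"umbrella": 0.6, "iced": 0.4},
    "a": {"coat": 0.5, "taxi": 0.5},
    "the": {"bus": 1.0},
    "iced": {"coffee": 1.0},
}


def sample_continuation(prompt: str, rng: random.Random, max_new_words: int = 5) -> str:
    """Generate text by sampling each next word in proportion to its probability."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = TOY_MODEL.get(words[-1], {END: 1.0})
        next_word = rng.choices(list(candidates), weights=list(candidates.values()))[0]
        if next_word == END:
            break
        words.append(next_word)
    return " ".join(words)


prompt = "It is raining, I should take"
for seed in range(3):
    # Different random seeds usually give different continuations.
    print(sample_continuation(prompt, random.Random(seed)))
```

Fixing the seed makes a run reproducible; changing it is the toy equivalent of pressing “generate” again on the same input.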

OpenAI/GPT-2 – Creating fake news

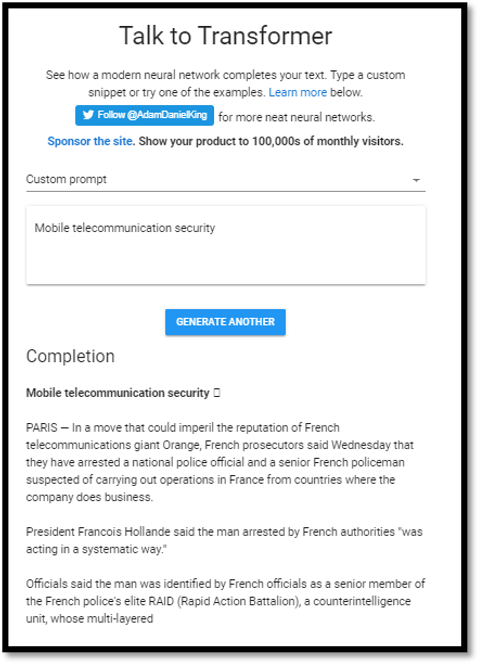

I started to think more about how this tool could be exploited, and remembered some press coverage about AI and potential fake news. Having recently worked in the telecom industry, I decided to enter “Mobile telecommunication security” as an input and see what came out.

I then generated the output a few times and got the following:

Open AI Transcript:

“PARIS — In a move that could imperil the reputation of French telecommunications giant Orange, French prosecutors said Wednesday that they have arrested a national police official and a senior French policeman suspected of carrying out operations in France from countries where the company does business.

President Francois Hollande said the man arrested by French authorities “was acting in a systematic way.”

Officials said the man was identified by French officials as a senior member of the French police’s elite RAID (Rapid Action Battalion), a counterintelligence unit, whose multi-layered missions often included a focus on terrorism.

The raid’s result was a priority target, so an intensive intelligence operation was carried out to ensure that a terrorist attack in France was prevented,” said President Hollande’s office.

The Paris prosecutor’s office also said the man’s name was Francois-Xavier Bonin, 57, a French national born in 1978.”

I am really impressed by how credible the generated text is. First, the entire paragraph makes sense and is well written. It is also very realistic, mixing fake news with real information: Hollande really was a French president; Francois-Xavier Bonin sounds like a real French name; and someone born in 1978 would have been in their mid-thirties during Hollande's presidency (2012 to 2017), a credible age for such a suspect.

However, on closer inspection, a few issues stand out. “Rapid Action Battalion” is not a French term (the French usually use French words rather than English ones for acronyms). RAID does have a French expansion, but it stands for “Recherche, Assistance, Intervention, Dissuasion”. The Rapid Action Battalion does exist, but it is an elite anti-crime and anti-terrorism unit of the Bangladesh Police. The text also gives the man's age as 57 while stating he was born in 1978. Trivial errors of this kind can hint to readers that the text was written by an AI program.

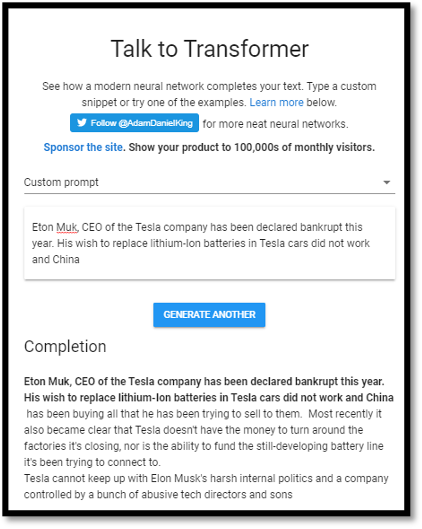

OpenAI/GPT-2 – Creating targeted fake news step by step

One way to quickly create a fake news story is to follow a small number of steps:

- Targeting a person, e.g. Eton Muk (note the deliberate spelling mistake)

- Adding a hoax, e.g. bankruptcy

- Mixing in real information, e.g. the Tesla company and lithium-ion batteries

- Finalising by checking the generated output and, if needed, linking sentences together

Example: Let’s input the following sentence to the AI model: “Eton Muk, CEO of the Tesla company has been declared bankrupt this year. His wish to replace lithium-Ion batteries in Tesla cars did not work and China”

Open AI Transcript:

Eton Muk, CEO of the Tesla company has been declared bankrupt this year. His wish to replace lithium-Ion batteries in Tesla cars did not work and China has been buying all that he has been trying to sell to them. Most recently it also became clear that Tesla doesn’t have the money to turn around the factories it’s closing, nor is the ability to fund the still-developing battery line it’s been trying to connect to.

Tesla cannot keep up with Elon Musk’s harsh internal politics and a company controlled by a bunch of abusive tech directors and sons.

If you are a shareholder of Tesla corporation you might be eligible for some refunds. Please contact Tesla’s lawyer at MyshareInfo@lawsuite.com for more details.

The last paragraph was added manually to show how easily this could be exploited for a phishing attack. I leave you to imagine the security implications of coupling the GPT-2 engine with a good chatbot to perform real-time social engineering or phishing. Note also that “Eton Muk” was later replaced by “Elon Musk”, indicating that the model's training data included information about Elon Musk.

Other applications – Fake news for audio and video

Fake audio:

The Descript software, whose voice-cloning technology comes from Lyrebird (a company Descript acquired in 2019), allows a user to clone a voice from data samples. The amount of data needed to train the algorithm has dropped drastically over the years, making it possible to create a cloned voice from a minimal amount of data.

Originally designed to make podcasts easy to edit, this technology is restricted to generating voice-overs in the user's own voice. However, if it were misused to imitate other people's voices, it could become a security threat, enabling more social engineering and biometric (voice recognition) attacks.

Fake video:

Deepfake software uses artificial intelligence to let a user create fake videos of someone by swapping faces. This could also be used to generate fake news. A well-known imitation of Barack Obama by Jordan Peele created a buzz on YouTube with more than 7 million views. It is only a matter of time before fake videos are used to spread political, economic or social rumours (e.g. fake pornographic videos used for revenge).

Fake news countermeasures

New protective laws:

Several countries, such as China and France, have passed new laws that make spreading fake news illegal. While such initiatives can reduce the impact of fake news nationally, they may be far less effective outside their jurisdiction or in an international context (e.g. someone creating fake Chinese news from outside China is unlikely to be prosecuted).

Education:

Another initiative, currently being trialled, is a dedicated education program that develops critical-thinking skills. So far, this program is showing very promising results in the fight against fake news. However, there are strong concerns about its ability to keep up with future fake news and its fast-paced evolution.

New technologies – AI against AI

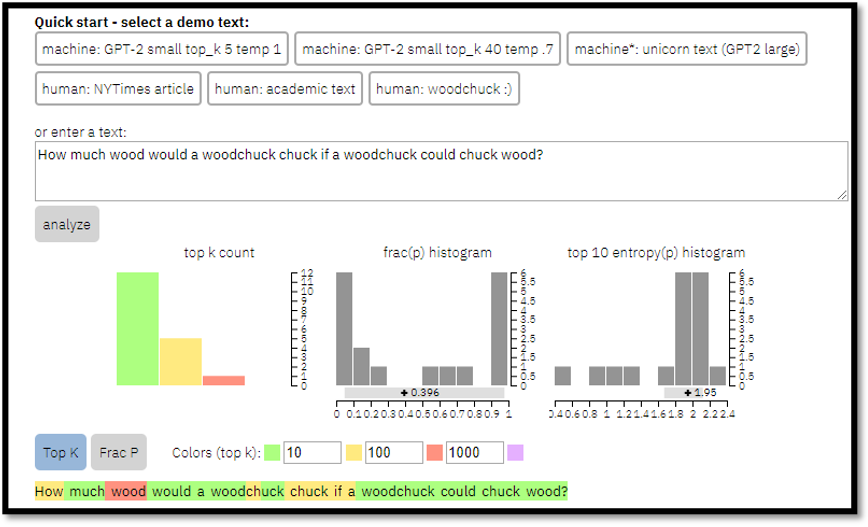

GLTR (Giant Language model Test Room), developed by Harvard NLP and the MIT-IBM Watson AI Lab, is designed to determine whether a text was written by a language model. Since several AI-based tools can be used to create fake news, this kind of software could help identify such content.

The screenshot above shows GLTR's analysis of its default example text.

Each text snippet is analysed to determine how highly the model ranked each word that was actually used. The principle is simple: if the word is among the model's top 10 predictions, its background is coloured green; top 100, yellow; top 1,000, red; otherwise, purple.
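The colour-coding rule can be sketched in a few lines of Python. This is a simplified illustration, not GLTR's actual code: it assumes we already have the model's predicted probability for each candidate word at one position (here a made-up dictionary standing in for GPT-2's output), ranks the word that was actually used, and maps that rank to the four colours. A real model scores its whole vocabulary, so every word gets a rank; the “not predicted at all” case below is only a convenience for the toy data.

```python
from typing import Dict


def rank_to_colour(rank: int) -> str:
    """Map a 1-based prediction rank to GLTR's colour buckets."""
    if rank <= 10:
        return "green"
    if rank <= 100:
        return "yellow"
    if rank <= 1000:
        return "red"
    return "purple"


def colour_for(actual_word: str, predictions: Dict[str, float]) -> str:
    """Colour for the word actually used, given the model's predicted
    probabilities for candidate words at that position."""
    if actual_word not in predictions:
        return "purple"  # the toy model did not predict this word at all
    ordered = sorted(predictions, key=lambda w: predictions[w], reverse=True)
    rank = ordered.index(actual_word) + 1
    return rank_to_colour(rank)


# Hypothetical predictions for the word after "I should take":
# 2,000 made-up candidate words with decreasing probabilities,
# plus "umbrella", assumed here to be the model's second choice.
predictions = {f"word{i}": 1.0 / (i + 1) for i in range(2000)}
predictions["umbrella"] = 0.9

for word in ["umbrella", "word50", "word500", "word1500"]:
    print(word, colour_for(word, predictions))
```

A text in which nearly every word lands in green is the kind of pattern GLTR highlights, since language-model generators tend to pick highly ranked words more often than human writers do.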

Unfortunately, because some of the tool's functions were unavailable at the time of writing, I could not assess the technology properly. However, it is worth noting that determining whether a text was “human written” or “AI written” took a long time.

Conclusion:

In 2016, the world woke up to a “fake news” crisis. Since then, numerous new AI technologies have emerged that will likely exacerbate the issue, making it increasingly difficult to distinguish “real news” from “fake news”. Jordan Peele's deepfake video imitating Barack Obama should be seen as a real warning to the international community about how powerful and destructive these new technologies can be.

Looking at existing countermeasures, legal protection against “fake news” is a reactive measure that is unlikely to be effective outside local jurisdictions.

On the technology side, “AI fake news detection software” is still very immature. The tool I tested was extremely slow and seems to be losing the race against “AI fake news creation software”.

Finally, educational measures seem to provide the best results so far, but they come at a significant cost and are unlikely to be sufficient to identify future “fake news”, which is growing more sophisticated every day.

Given this outlook, reducing the impact of “fake news” on our political, economic and social systems will likely require a combination of initiatives. First, new state-of-the-art tools for detecting fake news patterns and content need to be created. These tools will also need to evolve and learn constantly to keep up with the fast evolution of fake news creation technologies. Second, a vast educational program needs to be developed to raise the population's awareness of these new types of threats. Finally, these initiatives would need to be governed by an independent international ethics committee that would provide a heuristic analysis of the accuracy of information while respecting one of our most important rights: freedom of speech.

Siegfried Lafon

Siegfried Lafon (Sieg) is a Security Senior Manager in Accenture Security Technology Consulting, based in Australia, with over 15 years of experience in the security field. Sieg is a well-recognised solution architect with broad technical knowledge across different security domains (IAM, Cloud, Application Security, Information Security policies and processes, Strategy and Cyber Defence) and is passionate about futuristic technologies, innovation and ideas. He loves probabilities, chess, surfing and camping, and is an advocate for cross-cultural diversity.