It cannot have escaped you that the word trustworthy has been added to the cybersecurity lexicon in recent years: trustworthy AI, trustworthy ML, trustworthy IoT and so on. Indeed, parallel to the cybersecurity hype we have seen trusted computing again start to come to the fore, along with associated topics such as attestation and confidential computing.

Unfortunately, just saying a word, or adding it as an adjective to a sentence describing a product or technology, does not add value, at least not outside the advertising domain.

In this article I wish to open the discussion about what these terms mean and how we should implement cybersecurity technologies to support these concepts. I should point out that work in this area already exists: for example, the IETF’s Remote ATtestation procedureS (RATS) working group and the Trusted Computing Group provide definitions, implementation technologies and reference architectures. We do not contradict these but aim to develop a more ontological description into which these other definitions (at differing abstraction levels and with differing purposes) can be mapped.

Actions to Obtain Trust: Measurement

Firstly, a little history and some grounding in something existing. When trusted computing is brought up it invariably leads to a conversation about the Trusted Platform Module (TPM) – a passive device capable of storing information securely, generating keys and performing some cryptographic operations. Typically seen in [UEFI] x86 systems (and now some ARM SoC systems), it provides a mechanism for establishing identity and authenticity and for storing integrity measurements. When utilised properly through measured boot (and some run-time integrity mechanisms) it provides an extremely powerful way of preventing and mitigating firmware, boot-kit, configuration, and supply-chain attacks. The TPM is one of many mechanisms for establishing and reporting identity and integrity of a system – do not confuse TPM with trusted computing as a concept.

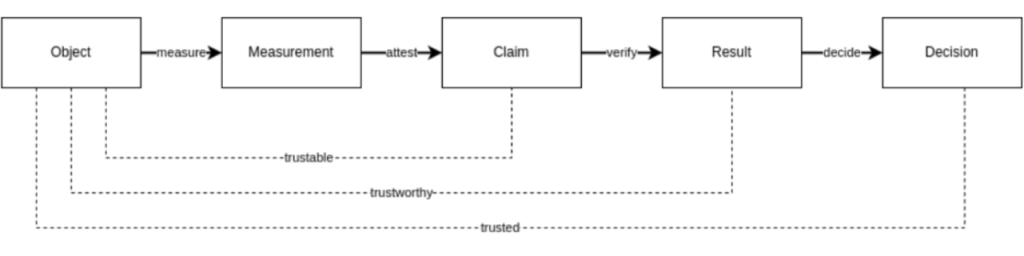

The best way to understand a system is to look at how the parts interact and behave together. If we wish to decide whether a system is trusted (or even trustworthy), we need to define the individual actions that must take place to do this and how these actions relate. The diagram below shows this structure:

Let us start with measurement: the process by which we extract a description of some object that we wish to trust. In a UEFI x86 measured boot, measurements (recorded in the UEFI event log and the TPM’s PCRs) are made of the firmware, boot loader, secure boot key databases, and firmware and hardware configuration, and sometimes the actual operating system kernel, hypervisor, libraries etc.

These measurements are invariably cryptographic hashes of those structures. Other objects might provide other kinds of measurement, for example, containers should come with their SBOMs (software bill of materials).

One property of a measurement is that it should be cryptographically verifiable and linkable back to the object providing that measurement. UEFI does this by first establishing an untamperable core root of trust, and then building a chain of trust from there using cryptographic hashes.
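The chain-of-trust idea can be sketched in a few lines of Python. This is a minimal illustration and not how any particular firmware implements it: the component names are hypothetical, only a single SHA-256 register is modelled, and the register starts at all zeros. The `extend` step follows the usual TPM PCR rule of hashing the old register value concatenated with the new measurement digest.

```python
import hashlib

PCR_SIZE = 32  # bytes in a SHA-256 register


def measure(component: bytes) -> bytes:
    """A measurement here is simply the SHA-256 digest of the component."""
    return hashlib.sha256(component).digest()


def extend(pcr: bytes, digest: bytes) -> bytes:
    """Fold a new measurement into the register: new = H(old || digest)."""
    return hashlib.sha256(pcr + digest).digest()


def replay(event_digests) -> bytes:
    """Recompute the register value from an ordered log of measurement digests."""
    pcr = bytes(PCR_SIZE)  # assumed initial value: all zeros
    for digest in event_digests:
        pcr = extend(pcr, digest)
    return pcr


# Hypothetical boot components, measured in the order they execute.
boot_chain = [b"firmware image", b"boot loader", b"kernel"]
event_log = [measure(c) for c in boot_chain]
pcr_value = replay(event_log)

# A log that differs in any entry no longer replays to the same register value.
tampered_chain = [b"firmware image", b"modified boot loader", b"kernel"]
tampered_log = [measure(c) for c in tampered_chain]

print("honest log matches register:  ", replay(event_log) == pcr_value)
print("tampered log matches register:", replay(tampered_log) == pcr_value)
```

Because each value depends on everything extended before it, the final register value commits to the whole ordered sequence of measurements, which is what allows a log to be checked against it later.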

Attestation and Verification

Having measured an object, we can perform the next action, which is attestation. The term attestation is often applied to the entire process, but it is worth clarifying the actual action. Attestation is the process by which measurements are extracted and presented in a form where they can be analyzed or verified. A TPM, for example, produces quotes, which contain information about a set of measurements in the PCRs, metadata about the state of the machine, and a cryptographic signature made with an attestation key that binds the quote to the device that produced it. Other kinds of claims produced by attestation are log files, e.g., the Intel TXT log, the UEFI event log, the Linux IMA filesystem integrity log and so on, but these need to be cross-referenced against the TPM.

The next action is to verify the claim to produce a decision: we simply ask the question, does this claim match what I am expecting? Using our TPM quote example again, this is broken down into a set of individual questions: is this a quote? Does it match its cryptographic signature? Does the attested value match the expected attested value? Is the state (clock, reset/reboot/safe counters) good?
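The four questions above can be written as four independent checks. The sketch below is illustrative only: the `Quote` structure, the `QUOTE_MAGIC` marker and the key are hypothetical, an HMAC stands in for the asymmetric signature a real TPM makes with its attestation key, and "state is good" is assumed to mean the safe flag is set and the reset counter matches the value we expect.

```python
import hashlib
import hmac
from dataclasses import dataclass

QUOTE_MAGIC = b"QUOTE"  # hypothetical marker identifying a quote structure


@dataclass
class Quote:
    magic: bytes
    attested: bytes      # digest over the selected PCR values
    reset_count: int     # platform state reported alongside the measurements
    safe: bool
    signature: bytes = b""

    def body(self) -> bytes:
        """The bytes covered by the signature."""
        fields = [self.magic.hex(), self.attested.hex(),
                  str(self.reset_count), str(int(self.safe))]
        return "|".join(fields).encode()


def sign(quote: Quote, key: bytes) -> Quote:
    """Stand-in for the device signing the quote with its attestation key."""
    quote.signature = hmac.new(key, quote.body(), hashlib.sha256).digest()
    return quote


def verify(quote: Quote, key: bytes, expected_attested: bytes,
           expected_reset_count: int) -> dict:
    """Answer each verification question separately."""
    expected_sig = hmac.new(key, quote.body(), hashlib.sha256).digest()
    return {
        "is_quote": quote.magic == QUOTE_MAGIC,
        "signature_matches": hmac.compare_digest(quote.signature, expected_sig),
        "value_matches": hmac.compare_digest(quote.attested, expected_attested),
        "state_good": quote.safe and quote.reset_count == expected_reset_count,
    }


def decide(checks: dict) -> bool:
    """A positive decision requires every question to be answered 'yes'."""
    return all(checks.values())


key = b"hypothetical attestation key"
golden = hashlib.sha256(b"known good PCR values").digest()

good_quote = sign(Quote(QUOTE_MAGIC, golden, reset_count=7, safe=True), key)
bad_quote = sign(
    Quote(QUOTE_MAGIC, hashlib.sha256(b"modified").digest(), 7, True), key)

for name, quote in [("good", good_quote), ("bad", bad_quote)]:
    checks = verify(quote, key, golden, expected_reset_count=7)
    print(name, checks, "trusted" if decide(checks) else "not trusted")
```

Keeping the individual answers, rather than collapsing them straight into a single boolean, lets whoever consumes the result see which question failed.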

How to Decide?

If these questions are all answered in the affirmative then we have a positive decision; otherwise the object is not trusted. This process is typically performed by a [remote] attestation server, the results of which should be communicated to other parts of the system and utilised in their behaviours. For example, a server not being trusted might have its network connection restricted, trigger an update, or even affect any workload management mechanism, as we might see in container-based systems.

Now that we have a decision, we can extend this decision process further and consider what being trusted means. As you might have suspected, there is more than one set of claims possible for an object, and these claims might be related somehow. Then there is the question of whether a system containing many objects is still trusted if one or more parts fail their trust decision.

Furthermore, we might even have differing levels of trust in a single system. For example, I can boot my laptop in three ways: measured boot (SRTM measurements), measured boot with Intel TXT, and then (in some cases) with or without secure boot being active. If I boot with TXT then I can only trust the core root of trust measurement and the kernel measurement; if I do not use TXT then I can only trust up to the point where the firmware hands control over to the kernel. Which is the more trustworthy?

So now we have our four actions that we must consider when establishing trust in a system:

- measure

- attest

- verify

- decide

In later articles we will talk more about these processes, but for now we will address some adjectives: trustable, trustworthy, and trusted.

- A trustable object is one on which we can perform attestation, remembering of course that the claims produced by attestation must have properties that link them to the object producing them.

- A trustworthy object is one that we can verify: an object for which we have reference, golden or expected values against which we can compare.

- A trusted object is one where a [positive] decision can be made.
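One way to read these three definitions is as successively stronger predicates on the same object. The sketch below is my own illustration under that reading: the `TrustObject` type and its fields are hypothetical, a claim is reduced to a byte string, and verification is reduced to an equality check against the reference value.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrustObject:
    name: str
    claim: Optional[bytes] = None     # produced by attestation; None if it cannot attest
    expected: Optional[bytes] = None  # reference ("golden") value; None if we have none


def is_trustable(obj: TrustObject) -> bool:
    """We can perform attestation on it, so a claim exists."""
    return obj.claim is not None


def is_trustworthy(obj: TrustObject) -> bool:
    """We can verify it: there is a claim and a reference value to compare against."""
    return is_trustable(obj) and obj.expected is not None


def is_trusted(obj: TrustObject) -> bool:
    """Verification gave a positive decision."""
    return is_trustworthy(obj) and obj.claim == obj.expected


objects = [
    TrustObject("legacy sensor"),                              # cannot attest
    TrustObject("new gateway", claim=b"abc"),                  # no reference values yet
    TrustObject("patched server", claim=b"abc", expected=b"abc"),
    TrustObject("modified server", claim=b"xyz", expected=b"abc"),
]

for obj in objects:
    print(f"{obj.name}: trustable={is_trustable(obj)}, "
          f"trustworthy={is_trustworthy(obj)}, trusted={is_trusted(obj)}")
```

Under this reading an object can be trustworthy without being trusted: the "modified server" can be verified, but the decision is negative.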

In conclusion, by giving some meaning to these terms and relating them together we provide ourselves with a framework in which we can properly discuss notions of trustworthiness and trust.

A Few Final Points

Let us now return to a point raised at the beginning of this article: when someone talks about, say, trustworthy AI, what does this mean? I will leave this as more of an academic exercise for the reader, but if I have a piece of software (or system, if we include the hardware it is running on) that is running some AI algorithm, what will make it trusted? How are measurements taken? How are these attested and verified? What criteria make up the decision to state that it is trusted?

We have interchangeably used the terms system and object in this article – systems (or objects) are made from other interconnected systems (or objects). How does trust in one system affect trust in another system?

Finally, we have a proverbial elephant in the room: where does trust come from? Who is the trusted authority from which all of this derives? Until next time.

Ian Oliver

Dr Ian Oliver is a Distinguished Member of Technical Staff at Bell Labs working on Trusted and High-integrity Cyber Security applied to 5/6G telecommunications, Edge and IoT devices with particular emphasis on the safety-critical domains, such as future railway and medical systems. He holds a visiting position at Aalto University Neurobiology Dept working on cyber security and medical applications as well as lecturing on the subject of medical device cybersecurity. He is the author of the book “Privacy Engineering: A data flow and ontological approach” and holds over 200 patents and academic papers.
