Finding the butterfly – the challenge of AI standards

The flap of a single butterfly’s wings can set off a storm thousands of kilometres away, or so says chaos theory. A few years ago chaos theory was pushed to the front of the R&D queue in software development. It moved beyond its initial home of meteorology into a set of fields where it was not suited, and rather than accept that, it was made to fit, so the simplicity of the theory got lost in the hype. But which butterfly? Will AI solve the unsolvable? Is AI going to be the magic tool that solves the world’s problems, or is it a malicious force out to end the role of mankind? Obviously it is neither of these, but striking a balance between enslavement to the age of the machine, as seen in dystopian fiction, and harmonious use of tools is going to be difficult.

There is probably more hype and concern over AI than over any other recent software development, and a similar rush to apply AI to anything and everything. AI can be dismissed as just software, but it shouldn’t be, and this is key to both the novelty and the danger of AI. AI, like any form of intelligence, is best used with a degree of caution. As stated by Alexander Pope, “a little learning is a dangerous thing”, which is often misquoted as “a little knowledge is a dangerous thing”, and it is this that is at the root of many of the problems of AI. Machine Learning (ML) is the basis of much of what is practically observed in the AI domain and, in the crudest of terms, makes correlations out of data to predict what will happen next. With feedback applied, the correlations are improved so that the predictions become more accurate over time. The ML engine, the learning bit or algorithm that determines which model best fits the observed data, is the complex bit, and it needs a lot of data points to get right.
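As a minimal sketch of the feedback loop described above, and not a description of any particular ML product, the following Python fragment learns a straight-line relationship one observation at a time. The data stream, the `learn_online` function and the learning rate are all assumptions made purely for illustration.

```python
# Hypothetical data stream: the hidden relationship is y = 3x + 1.
# The learner never sees that formula, only (x, y) observations.
samples = [(x / 10.0, 3 * (x / 10.0) + 1) for x in range(-10, 11)] * 50


def learn_online(samples, learning_rate=0.1):
    """Predict, compare with what actually happened, then nudge the model."""
    weight, bias = 0.0, 0.0
    errors = []
    for x, observed in samples:
        predicted = weight * x + bias
        error = predicted - observed        # the feedback signal
        errors.append(abs(error))
        weight -= learning_rate * error * x  # adjust the correlation
        bias -= learning_rate * error
    return weight, bias, errors


weight, bias, errors = learn_online(samples)
early_error = sum(errors[:21]) / 21   # average error over the first pass
late_error = sum(errors[-21:]) / 21   # average error over the last pass
print(f"learned y = {weight:.3f}x + {bias:.3f}")
print(f"average error fell from {early_error:.3f} to {late_error:.6f}")
```

The sketch only works this neatly because the data is clean and the model shape (a straight line) happens to match the hidden relationship, which is exactly the assumption that real data rarely honours.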

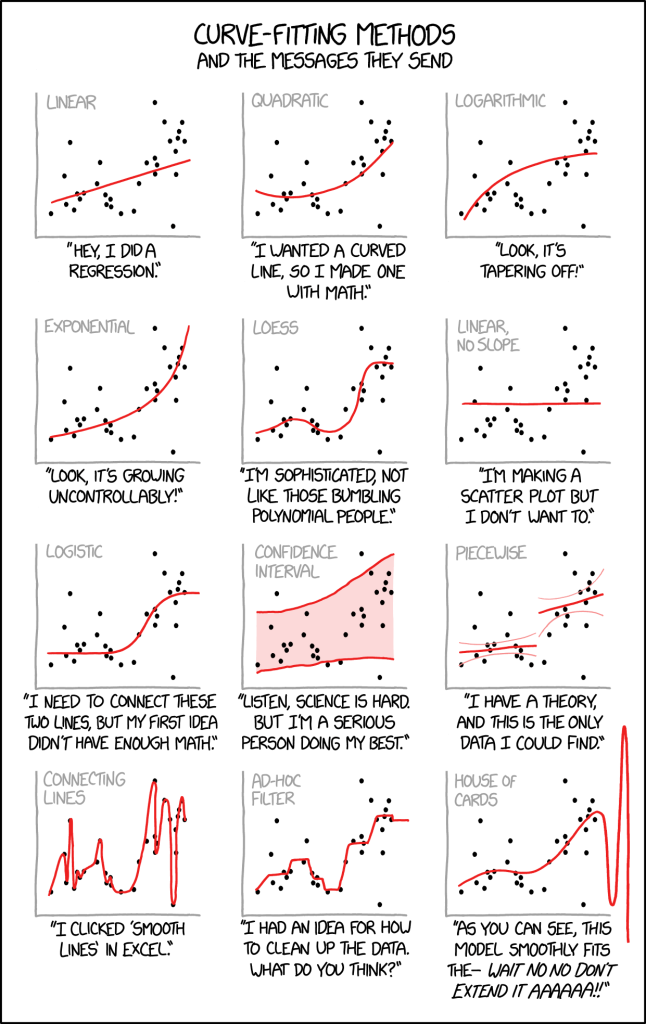

If the cartoon from xkcd above tells us anything, it is that curve fitting and gaining knowledge out of data is often going to be difficult (note that every curve in the cartoon sort of fits the same data). In some respects ML carries the risks captured in the line popularised by Mark Twain (who attributed it to Benjamin Disraeli): “There are three kinds of lies: lies, damned lies, and statistics”. It would not be unreasonable to suggest that if the same were written today there would be four kinds of lies: lies, damned lies, statistics, and computer intelligence. The problem is in choosing the right data, but that depends on the result that’s desired. The wrong data skews the result, and deliberately using skewed data to back up a result is, as suggested by Twain, a lie.
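The point that several curves can fit the same data can be sketched in a few lines of Python. The observations below are made up for illustration, and the two models (a least-squares straight line and a polynomial forced through every point) are simply convenient examples, not a recommendation of either.

```python
from statistics import mean

# Made-up observations for illustration only.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 2.9, 5.2, 6.8, 9.1]


def fit_line(xs, ys):
    """Ordinary least-squares straight line; returns a predictor function."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return lambda x: intercept + slope * x


def fit_interpolating_polynomial(xs, ys):
    """Lagrange polynomial that passes exactly through every observation."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return predict


def max_abs_error(model, xs, ys):
    """Worst-case miss of a model on the observed data."""
    return max(abs(model(x) - y) for x, y in zip(xs, ys))


line = fit_line(xs, ys)
poly = fit_interpolating_polynomial(xs, ys)
line_error = max_abs_error(line, xs, ys)
poly_error = max_abs_error(poly, xs, ys)

# Ask both models about a point well outside the observed range.
x_new = 8.0
line_forecast = line(x_new)
poly_forecast = poly(x_new)
print(f"fit error: line {line_error:.2f}, polynomial {poly_error:.2f}")
print(f"forecast at x={x_new}: line {line_forecast:.2f}, polynomial {poly_forecast:.2f}")
```

Both models look acceptable on the observed points, and the polynomial looks better because it misses none of them. Yet at `x = 8` the line forecasts about 17 while the polynomial forecasts about 141. Nothing in the data alone says which to believe.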

In order to solve a problem like AI it is essential to assess what the problem really is. Furthermore, if we want to apply technology as part of the solution, we need to know what technology is needed. The debate around problems and solutions has to be rational too. At ETSI there has been growing acceptance that standards will play a role in the solution, and this has rapidly been reflected across many other standards development organisations (SDOs). So here we will summarise the activity of ETSI to date, and highlight some of the actions we will be taking over the coming months and years to contribute to the effective, safe, and secure use of AI as a tool for human endeavour.

When ETSI started its focus on AI as a generic tool, as opposed to a very specific tool for network management and optimisation, it began by identifying a few core problems to be researched and in turn documenting the security problems of AI, an ontology of AI threats, a review of mitigation strategies to AI specific threats, and a study of the supply chain of AI.

In broad summary ETSI GR SAI 004, “Problem Statement”, describes the problem of securing AI-based systems and solutions, with a focus on machine learning, and the challenges relating to confidentiality, integrity and availability at each stage of the machine learning lifecycle. It also describes some of the broader challenges of AI systems including bias, ethics and explainability. A number of different attack vectors are described, as well as several real-world use cases and attacks.

The problem statement led directly to ETSI GR SAI 005, “Mitigation Strategy Report”, which summarizes and analyses existing and potential mitigation against threats for AI-based systems as discussed in the Problem Statement. The purpose of the document is to give a technical survey for mitigating against threats introduced by adopting AI into systems. The technical survey sheds light on available methods of securing AI-based systems by mitigating against known or potential security threats. It also addresses security capabilities, challenges, and limitations when adopting mitigation for AI-based systems in certain potential use cases.

At a very slight tangent, in recognition of the role of data in ML systems, and of the fact that compromising the integrity of data has been demonstrated to be a viable attack vector against such systems, the group developed ETSI GR SAI 002, “Data Supply Chain Security”. This document summarizes the methods currently used to source data for training AI, along with a review of existing initiatives for developing data sharing protocols. From that analysis it provides a gap analysis on these methods and initiatives to scope possible requirements for standards for ensuring integrity and confidentiality of the shared data, information and feedback. It is noted that this report relates primarily to the security of data, rather than the security of models themselves, which are addressed in a later report.
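To make the integrity concern concrete, here is a minimal sketch of one common technique: publishing a manifest of cryptographic fingerprints alongside a training set so that a recipient can detect altered records. This is an illustration under stated assumptions, not a mechanism taken from ETSI GR SAI 002. The record names, record contents and helper functions are hypothetical.

```python
import hashlib
import hmac


def fingerprint(record: bytes) -> str:
    """SHA-256 digest of one training record."""
    return hashlib.sha256(record).hexdigest()


def build_manifest(records: dict) -> dict:
    """Map each record name to its fingerprint at the point of publication."""
    return {name: fingerprint(data) for name, data in records.items()}


def find_tampered(records: dict, manifest: dict) -> list:
    """Names listed in the manifest whose data is missing or has changed."""
    tampered = []
    for name, expected in manifest.items():
        data = records.get(name)
        if data is None or not hmac.compare_digest(fingerprint(data), expected):
            tampered.append(name)
    return sorted(tampered)


# Hypothetical labelled training records as published by the data provider.
published = {
    "sample-001": b"cat,0.91",
    "sample-002": b"dog,0.87",
    "sample-003": b"cat,0.78",
}
manifest = build_manifest(published)

# The same records as received, with one label flipped somewhere in the chain.
received = dict(published)
received["sample-002"] = b"cat,0.87"

tampered = find_tampered(received, manifest)
print("tampered records:", tampered)
```

A check like this only helps if the manifest itself can be trusted, for example because it is signed or delivered over a separate channel, and it says nothing about whether the data was biased or poisoned before it was fingerprinted.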

The report on data supply chain security opened up the role of techniques for assessing and understanding data quality for performance, transparency or ethics purposes that are also applicable to security assurance. This addresses the aim of an adversary to disrupt or degrade the functionality of a model to achieve a destructive effect. This is to some extent strengthened by the content of ETSI GR SAI 001 “AI Threat Ontology” where an AI threat is defined in order to be able to distinguish it from any non-AI threat. The model of an AI threat is presented in the form of an ontology to give a view of the relationships between actors representing threats, threat agents, assets and so forth. The ontology described applies to AI both as a threat agent and as an attack target. Aspects of understanding intelligence are addressed by the document, as is the use of ontologies in data processing itself. It is noted by the report that many frameworks of cybersecurity are ontological in nature without being explicitly expressed in an ontological language.

Work from this set of reports has led on to addressing “The role of hardware in security of AI” in ETSI GR SAI 006, and the “Artificial Intelligence Computing Platform Security Framework” in ETSI GR SAI 009. Together these two address the role of hardware, both specialized and general-purpose, in the security of AI, and the extension of hardware by its operating software in building a platform for AI. This is being further developed into normative provisions to enable a secure computing platform for AI in ETSI GS SAI 015, which is expected to be published in Q1-2024.

A key element of AI, identified in many of the developing legislative instruments, is transparency and explicability (or explainability in more American use of English). This has now been addressed across a number of studies. ETSI GR SAI 007, “Explicability and transparency of AI processing”, takes a simplified view of both static and dynamic aspects by ensuring a developer is able to demonstrate the existence and purpose of AI in a system. ETSI GR SAI 010, “Traceability of AI Models”, is in development with publication expected in Q4-2023. The former identifies its target audience as designers and implementers who are making assurances to a lay person, such that designers are able to “show their working” (explicability) and to be “open to examination” (transparency).

The most recent publications of ETSI’s ISG SAI have continued the deep dive into the challenges of AI and specifically address topics of testing, AI based manipulation, and collaborative AI.

AI testing is a relatively new direction and is being addressed on two fronts at ETSI. In ETSI’s TC MTS the specific testing of AI is being looked at in order that normative test suites can be written in a consistent manner. In ISG SAI two approaches are being taken. In ETSI GR SAI 013, “Proofs of Concepts Framework”, the aim is to develop a framework that can be used as a tool to demonstrate the applicability of ideas and technology. The second thread is seen in ETSI GR SAI 003, “Security Testing of AI”, which identifies methods and techniques that are appropriate for security testing of ML-based components. The document addresses:

• security testing approaches for AI

• security test oracles for AI

• definition of test adequacy criteria for security testing of AI.
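For readers unfamiliar with the term, a test oracle is whatever decides whether a test passed. For ML components there is often no single "correct" output to compare against, so one widely used option is a robustness oracle: small changes to an input should not change the decision. The sketch below illustrates that idea only. It is not drawn from ETSI GR SAI 003, and the classifier, its weights, the inputs and the perturbation size are all hypothetical.

```python
from itertools import product


def toy_classifier(features):
    """Hypothetical stand-in for an ML component under test."""
    score = 0.8 * features[0] + 0.2 * features[1]
    return "malicious" if score >= 0.5 else "benign"


def robustness_oracle(classify, features, epsilon):
    """Return the perturbed inputs whose label differs from the original label.

    An empty list means the oracle's verdict is "pass" for this input.
    """
    expected = classify(features)
    violations = []
    # Try every combination of -epsilon, 0 and +epsilon on each feature.
    for deltas in product((-epsilon, 0.0, epsilon), repeat=len(features)):
        perturbed = tuple(f + d for f, d in zip(features, deltas))
        if classify(perturbed) != expected:
            violations.append(perturbed)
    return violations


# Hypothetical test inputs: two far from the decision boundary, one close to it.
test_inputs = [(0.9, 0.9), (0.1, 0.1), (0.62, 0.05)]
epsilon = 0.05

report = {x: robustness_oracle(toy_classifier, x, epsilon) for x in test_inputs}
robust_fraction = sum(1 for v in report.values() if not v) / len(report)

for features, violations in report.items():
    verdict = "pass" if not violations else f"fail ({len(violations)} label flips)"
    print(features, verdict)
print(f"fraction of inputs that passed: {robust_fraction:.2f}")
```

How many inputs and how many perturbations are enough before the result can be trusted is the adequacy question, and this sketch makes no attempt to answer it.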

Most recently ETSI’s ISG SAI has published ETSI GR SAI 011 addressing the Automated Manipulation of Multimedia Identity Representations. This gets down into the detail of many of the more immediate concerns raised by the rise of AI, in particular the use of AI-based techniques for automatically manipulating identity data represented in different media formats, such as audio, video and text (deepfakes). By analysing the approaches used, the work aims to provide the basis for further technical and organisational measures to mitigate these threats, and discusses their effectiveness and limitations.

In summary the work of ETSI’s ISG SAI has been to rationalise the role of AI in the threat landscape, and in doing so to identify measures that will lead to safe and secure deployment of AI alongside the population that the AI is meant to serve.

Scott Cadzow

Scott Cadzow, Vice Chair of the ETSI Encrypted Traffic Integration Industry Specification Group, participant in ETSI Technical Committee CYBER.